Courtesy of wikipedia.org

Predicting the future is difficult. Who could have foreseen the pandemic in 2020? Or the insurrection on January 6 of this year?

Even so, people do make a living as prognosticators. Land use planners are among them. They attempt to predict human migrations, for example: urban flight, urban renewal, the growth of suburbia. These shifts are driven by economics. Sometimes it is better to live in a city. Sometimes it isn’t.

Planners are scratching their heads at the moment. The pandemic forced some folks to do their jobs at home. That meant fewer commutes, fewer people having lunch near their offices, fewer workers shopping downtown. Much of that lost revenue went to the suburbs. Now, developers are struggling to accommodate the shift in urban and suburban lifestyles. (“The Rise of the Zoom Town,” by Aarian Marshall, Wired, June/July 2021, pgs. 28-29)

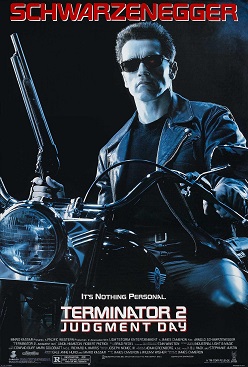

Similarly, military planners try to predict the future of war. Will robots have a role? If so, how much data will artificial intelligence need to make decisions formerly made by humans? (“Trigger Warning,” by Will Knight, Wired, June/July 2021, pgs. 29-20) When I wrote about robot usurpation earlier, I was unaware of how far along these plans already were.

To envision the future, the military relies not only upon Google’s data but also upon its search engine, which uses large language models. Google’s prototype was BERT. Now there is GPT-3, built by OpenAI and far larger. GPT-3 is so vast that it is impossible for humans to know what has been collected: the good, the bad, and the ugly. To be socially responsible, Google created research teams to determine whether unsorted data skews what we think we know.

One team found that collecting data without sorting it seemed to introduce a racial bias, and it wrote a paper on the issue: “On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?” Their suggestion was to curate information so that historical statistics were balanced against modern trends.

Google’s higher-ups balked at their conclusion. People who proposed the idea lost their jobs. (“The Exile,” by Tom Simonite, Wired, June/July 2021, pgs. 115-127) But other experts outside the company were more receptive. They thought curating data was a good idea.

Congress cast a sleepy eye on the question but did nothing. So, after the kerfuffle, with the dissenters gone, nothing much changed. Instead, one government body, the National Security Commission on Artificial Intelligence, indirectly defended GPT-3 when it called upon Congress to resist calls for a ban on autonomous weapons. Robots and drones rely upon that system.

Ironically, the commission’s recommendation runs contrary to the Defense Department’s 2012 policy on autonomous weapons. While that policy “… did not explicitly mandate that a soldier intervenes in every decision,” it did assume a human would be kept informed. (“Trigger Warning,” by Will Knight, Wired, June/July 2021, pg. 31)

According to one expert, a change that takes humans out of the loop would mean crossing an “ethical Rubicon.” (Ibid., pg. 31) The policy he recommends is that world leaders ban autonomous weapons as they did biological weapons. (Ibid., pg. 31) Otherwise, once the technology exists, he warns, criminals and terrorists will put it to subversive uses.

Congress and the public need to become “woke” about the debate on autonomous weapons. We are about to unleash into the world machines that operate without human oversight. As a species, can we be sure we are evolved enough to play with the toys we are creating?